Standardization Study of the BNI Affect Test

Susan R. Borgaro, PhD

Division of Neurology, Barrow Neurological Institute, St. Joseph’s Hospital and Medical Center, Phoenix, Arizona

Abstract

Disturbances in affect often follow brain injury although they are seldom the focus of a neuropsychological examination. Several tests have been developed to assess affect, but most have serious methodological limitations and lack standardization with normal subjects. Their clinical utility is thereby limited. This study describes the standardization results from 200 healthy normal individuals on the BNI Affect Test. Overall, females performed better than males and younger subjects performed better than older subjects. All subjects scored the lowest on the Facial Perception subtest and on items reflecting anger. The results of this study provide the basis for clinical and comparative studies on affect disturbances in patients with brain dysfunction.

Key Words: affect, expressed emotion, neuropsychology

Affect disturbances have been documented in many neurological conditions, including but not limited to cerebrovascular accident (CVA), traumatic brain injury (TBI), Parkinson’s disease, Alzheimer’s disease, spinal cord injury, and multiple sclerosis.[4-8,10,21,35,37,38,41,45,48] Numerous studies have consistently supported the potential dominant role of the right hemisphere in processing affective stimuli, particularly when the lesion is located posteriorly.[2,16,25,36,47] Patients with an injured right cerebral hemisphere have experienced impairments in the auditory and visual perception and recognition of facial expressions;[14,15,22,28,32,42,43] the generation of behavioral expressions;[13,39] the perception, generation, and comprehension of affective tone;[20,26,27,31,32,40,46] and the appreciation of humorous stimuli.[3]

Affect is expressed through a combination of verbal and nonverbal functions that facilitate interpersonal communication. When affective communication is impaired, the ability to perceive emotional cues, to produce prosody, and to discriminate emotional situations may be compromised significantly. Such impairments can cause difficulties in interpreting situational and interpersonal cues, possibly contributing to inappropriate interactions, diminished communication, and withdrawal from interpersonal interactions. Consequently, such disturbances are likely to have important implications for treatment, particularly when combined with other difficulties involving communication and cognition (e.g., speech/language impairment).[7]

Several tests have been developed to assess affective functioning, but they are best regarded as research tools. Many have methodological limitations in terms of normative and clinical data (e.g., lack norms, standardization, and reliability) or are limited structurally (e.g., assess a single mode of affect) or both.[9] The BNI Screen for Higher Cerebral Functions[35] is the only neuropsychological test to include a subtest of items that evaluates patients for disturbances in affect.

Several studies have documented disturbances in affect using the BNI Screen. For example, Zigler and Prigatano[48] found disturbances in the perception of facial expression in a heterogeneous group of patients with acute brain dysfunction. In this study, 40% of the sample had difficulties in perceiving facial affect compared to only 3% of normal controls. Borgaro and Prigatano[6] found that affect disturbances in acute TBI patients were just as prominent during early recovery as memory and awareness deficits, regardless of the severity of injury. In a similar study,[5] the same authors found that patients with severe TBIs had the greatest difficulties in perceiving and generating spontaneous affect. Recently, Borgaro and colleagues[7] compared performances on affect items in patients with CVAs and TBIs. Both groups had significant difficulty generating affective communication during early recovery, although patients in the CVA group had more difficulty in interpreting and perceiving affective stimuli than TBI patients.

The BNI Affect Test provides a more extensive examination of affective disturbances after brain injury than can be achieved with a screening test. It was developed to assess affect with respect to individual facial perceptions and interpersonal interactions and to discriminate between different emotional expressions. This article introduces the BNI Affect Test and describes the results of a standardization study of the test obtained on a large sample of normal controls.

Methods

Subjects

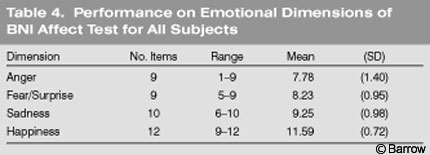

Participants were 200 normal control subjects (100 males, 100 females) recruited from the general population. Their mean age was 51.1 years (standard deviation [SD] = 13.1 years), and their mean level of education was 14.67 years (SD = 2.60 years; Table 1). There was no significant difference in age by gender (t [200] = 1.71, p = .088), although males (mean = 52.65 years, SD = 12.61 years) tended to be older than females (mean = 49.50 years, SD = 13.34 years). Six subjects had fewer than 12 years of school, 59 had graduated from high school, 76 had “some” college, and 87 had a college degree. The educational status of two subjects was unavailable.

Only individuals with no history of psychiatric or neurologic disease were enrolled in the study.

Materials

The BNI Affect Test consists of 43 laminated, 8- x 11-inch cards joined by a ring binder. The test is administered manually and was designed for individual administration. There is no time limit. The test includes a brief screen (i.e., Perception Screen) and four subtests: (1) Facial Perception, (2) Facial Matching, (3) Interpersonal Perception, and (4) Facial Discrimination.

The Perception Screen consists of three items, and each of the four subtests consists of 10 items. The test assesses four universal dimensions of emotion: happiness (12 items), sadness (10 items), anger (9 items), and fear/surprise (9 items). Fear and surprise items were combined into a single emotional dimension because there was little discrimination between the two emotions. That is, 47% of subjects identified the fear stimuli as surprise and 46% of subjects identified the surprise item as fear. This finding is consistent with other research.[19]

The three items of the Perception Screen were designed to assess a subject’s ability to identify facial pictures visually without imposing demands on visual perception or recognition. The screen is intended to identify subjects with a visual disturbance that would preclude them from completing the test accurately. Each item consists of three 3.25- x 3.25-inch photographs of the faces of three different people aligned horizontally across the top of the page. At the bottom of the page, another 3.25- x 3.25-inch photograph is identical to one of the pictures at the top of the page. The subject is asked to indicate which picture at the top of the page matches the picture at the bottom of the page. If a subject fails any of the three items, the test is not administered because the subject may have a visual disturbance that would impair performance.
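The screening rule described above amounts to a simple gate: the four subtests are given only if all three screen items are passed. The following is a minimal sketch in Python, not part of the test materials; the function name `passes_perception_screen` and the representation of responses as a list of booleans are illustrative assumptions.

```python
from typing import Sequence

SCREEN_ITEMS = 3  # the Perception Screen consists of three matching items


def passes_perception_screen(item_correct: Sequence[bool]) -> bool:
    """Return True only if every Perception Screen item was answered correctly.

    Hypothetical helper: failing any one of the three items means the
    remaining subtests should not be administered.
    """
    if len(item_correct) != SCREEN_ITEMS:
        raise ValueError(
            f"expected {SCREEN_ITEMS} screen items, got {len(item_correct)}"
        )
    return all(item_correct)
```

For example, `passes_perception_screen([True, False, True])` evaluates to `False`, so under this sketch the examiner would stop before the Facial Perception subtest.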

The Facial Perception subtest is designed to measure a patient’s ability to perceive and interpret facial affect. Each item consists of a single 5.5- x 6.5-inch photograph of a face of a person positioned in the center of the page. The subject is asked to identify the emotion that the person in the picture is expressing.

The Facial Matching subtest is designed to assess a patient’s ability to identify an emotional facial expression correctly. Each item consists of three 3- x 3-inch photographs of three different people, each displaying a different expression. Their pictures are aligned horizontally across the top of the page. On the bottom center of the page is another 3.25- x 3.25-inch photograph of one of the same people presented at the top of the page but with a different facial expression. The subject is asked to match the emotional expression on the bottom of the page to the same emotional expression displayed at the top of the page.

The Interpersonal Perception subtest is designed to assess a patient’s ability to interpret or perceive affective stimuli within the context of an interpersonal interaction between two people. Each item of this subtest consists of a single 10- x 7.5-inch photograph showing an interaction between two people. The picture is designed to reflect an emotional interaction, and subjects are asked to identify the emotion.

The Facial Discrimination subtest is designed to assess a patient’s ability to identify a specific facial expression among three different choices. Each item consists of three 3.25- x 3.25-inch photographs of three different people, each displaying a different emotion. The three pictures are aligned horizontally across the middle of the page. The subject is asked to indicate which picture is displaying a specific emotion.

For each subtest, a score of 1 is assigned for each correct response; a score of 0 is assigned for an incorrect response. Responses are recorded verbatim on the answer sheet. The total number of possible correct responses is 40. Adding the total score from each of the four subtests generates a Total Raw score. The Total Raw score can be converted to a Total T score for clinical interpretation. A Total Raw score for each subtest can also be converted into separate T scores for the interpretation of individual subtests.
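The scoring rules above can be sketched in a few lines of Python. This is an illustration only: the article does not state how raw scores are converted to T scores, so the sketch assumes the conventional linear T score (mean 50, SD 10), and the normative mean and SD passed to `t_score` are hypothetical placeholders rather than values from the test manual. All function names are likewise assumptions.

```python
from typing import Dict, Sequence

SUBTESTS = (
    "Facial Perception",
    "Facial Matching",
    "Interpersonal Perception",
    "Facial Discrimination",
)
ITEMS_PER_SUBTEST = 10  # four subtests x 10 items = 40 possible points


def subtest_raw_score(item_scores: Sequence[int]) -> int:
    """Sum the 0/1 item scores of one subtest (range 0 to 10)."""
    if len(item_scores) != ITEMS_PER_SUBTEST:
        raise ValueError(f"expected {ITEMS_PER_SUBTEST} items")
    if any(score not in (0, 1) for score in item_scores):
        raise ValueError("each item must be scored 0 or 1")
    return sum(item_scores)


def total_raw_score(responses: Dict[str, Sequence[int]]) -> int:
    """Add the four subtest raw scores to obtain the Total Raw score (0 to 40)."""
    return sum(subtest_raw_score(responses[name]) for name in SUBTESTS)


def t_score(raw: float, norm_mean: float, norm_sd: float) -> float:
    """Linear T score (mean 50, SD 10).

    ASSUMPTION: the conversion actually used for the BNI Affect Test is not
    described in the article; norm_mean and norm_sd are placeholders.
    """
    if norm_sd <= 0:
        raise ValueError("norm_sd must be positive")
    return 50.0 + 10.0 * (raw - norm_mean) / norm_sd
```

As a usage example with made-up numbers, a subject who scores 7, 9, 8, and 10 on the four subtests has a Total Raw score of 34; with a hypothetical normative mean of 34 and SD of 4, `t_score(34, 34, 4)` gives 50.0 and `t_score(30, 34, 4)` gives 40.0.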

Procedures

Each participant completed a brief demographic form and was then administered the BNI Affect Test. The test took about 10 minutes to administer to each subject.

Analysis

Differences on the BNI Affect Total score and individual subtests for gender were analyzed using independent t tests. Pearson correlation coefficients were calculated between age and BNI Affect Test Total score. Analysis of variance (ANOVA) was calculated for the Total score and subtest scores across three age groups (19 to 39 years old, 40 to 59 years old, and 60 to 78 years old) and across gender.
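For readers who wish to reproduce this kind of analysis on their own data, the three statistics named above can be computed as in the following sketch. It uses only the Python standard library and returns test statistics and degrees of freedom, not p values. The function names are illustrative assumptions, the pooled-variance form of the t test is assumed (the article does not specify the variant), and nothing here reflects the software actually used in the study.

```python
from math import sqrt
from statistics import mean, variance
from typing import Sequence, Tuple


def age_group(age: int) -> str:
    """Assign an age to one of the three age bands used in the study."""
    if 19 <= age <= 39:
        return "19-39"
    if 40 <= age <= 59:
        return "40-59"
    if 60 <= age <= 78:
        return "60-78"
    raise ValueError("age outside the 19 to 78 range of the sample")


def independent_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Pooled-variance independent-samples t statistic and its df."""
    n1, n2 = len(a), len(b)
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    t = (mean(a) - mean(b)) / sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation coefficient."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / sqrt(sxx * syy)


def one_way_anova_f(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """One-way ANOVA F statistic with between- and within-group df."""
    all_values = [value for group in groups for value in group]
    grand_mean = mean(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((value - mean(g)) ** 2 for g in groups for value in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within
```

Obtaining p values requires the t and F distributions; where SciPy is available, `scipy.stats.ttest_ind`, `scipy.stats.pearsonr`, and `scipy.stats.f_oneway` return both the statistic and the p value.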

Results

Total and Subtest Scores on BNI Affect Test

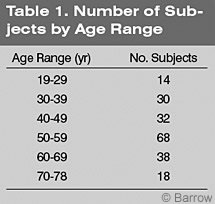

For the entire sample, the lowest mean score was obtained on the Facial Perception subtest, followed by the Interpersonal Perception subtest, Facial Matching subtest, and Facial Discrimination subtest (Table 2).

Males had significantly lower Total scores than females on the BNI Affect Test (t [200] = -4.11, p < .001). Males scored significantly lower than females on the Facial Perception (p < .01), Facial Matching (p < .05), and Facial Discrimination (p < .01) subtests (Table 3). There were no differences between the scores of males and females on the Interpersonal Perception subtest.

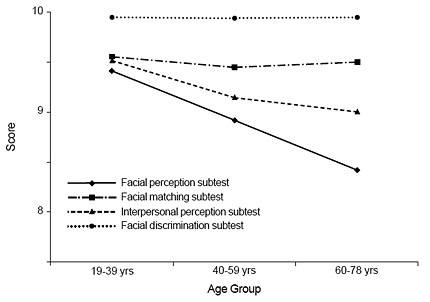

For the entire sample, the correlation between age and the Total score on the BNI Affect Test was significant (r = -.324, p < .001), suggesting that performance decreases as age increases (Fig. 1). The 19- to 39-year-old and 40- to 59-year-old groups had significantly higher Total scores than the 60- to 78-year-old group (F [1,198] = 9.79, p < .001). The two younger groups of females performed significantly better than the oldest group of females (F [2,98] = 4.12, p = .019). The 19- to 39-year-old group of males performed significantly better than the 60- to 78-year-old group of males (F [2,98] = 4.30, p = .016).

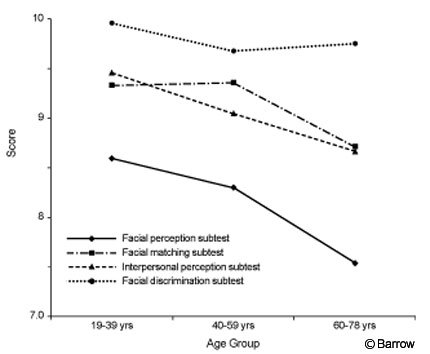

The 19- to 39-year-old females performed significantly better on the Facial Perception subtest than the 60- to 78-year-old females (F [2,98] = 5.68, p = .005; Fig. 2). Among the males, there were no significant differences in subtest scores across age groups, although differences between the youngest and oldest age groups on the Facial Perception subtest approached significance (F [1,98] = 2.80, p = .066; Fig. 3).

Figure 3. Performance of males on subtests of the BNI Affect Test by age group.

Between-subjects analysis revealed significant main effects for gender (F [1,194] = 13.43, p < .001) and age group (F [2,194] = 7.40, p = .001) on the Total score of the BNI Affect Test, although there was no significant gender-by-age group interaction (F [2,194] = .829, p = .438). There were also no significant interactions on the Facial Perception (F [2,194] = .165, p = .848), Facial Matching (F [2,194] = 1.73, p = .179), Interpersonal Perception (F [2,194] = .190, p = .827), or Facial Discrimination (F [2,194] = .462, p = .631) subtests.

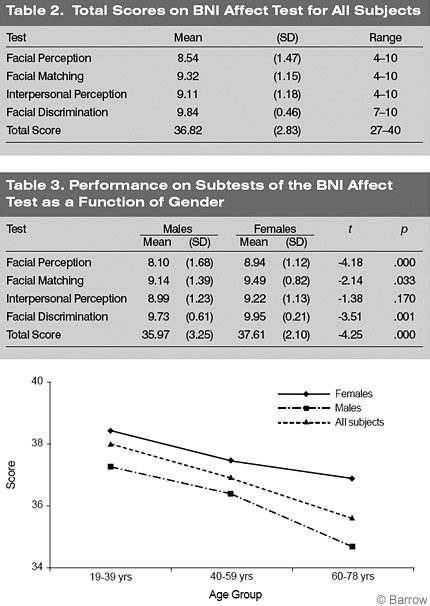

Performances on Emotional Dimensions

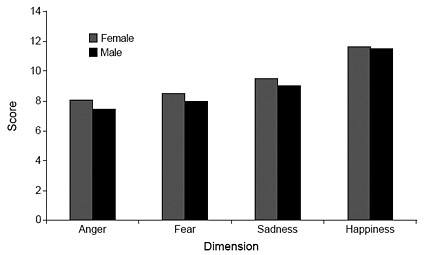

The lowest mean score was obtained on items reflecting anger, followed by the fear/surprise, sadness, and happiness items (Table 4). Males performed significantly lower than females on the anger (t [200] = -3.07, p = .002), fear (t [200] = -3.41, p = .001), and sadness (t [200] = -3.69, p < .001) items (Fig. 4). No significant gender differences were observed on the happiness dimension.

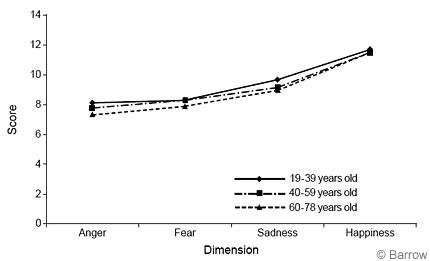

ANOVAs were conducted to determine if responses on each of the emotional dimensions (i.e., fear, anger, sadness, happiness) differed across age groups (Fig. 5). The 19- to 39-year-old group performed significantly better on the anger dimension than the 60- to 78-year-old group (F [2,198] = 5.28, p = .006). The 19- to 39-year-old and the 40- to 59-year-old groups performed significantly better than the 60- to 78-year-old group on the fear dimension (F [2,198] = 5.07, p = .007). The 19- to 39-year-old group performed significantly better than the 40- to 59-year-old and 60- to 78-year-old groups on the sadness dimension (F [2,198] = 7.89, p = .001). No differences were observed on the dimensions reflecting happiness (F [2,198] = 1.38, p = .255).

Males 19 to 39 and 40 to 59 years old performed significantly better than males in the 60- to 78-year-old group on the anger dimension (F [2,98] = 4.32, p = .016). The youngest group of males also performed significantly better than the oldest group on the sadness dimension (F [2,98] = 4.53, p = .013). The 19- to 39-year-old females performed significantly better than the 40- to 59-year-old females on the sadness dimension (F [2,98] = 3.15, p = .047).

Between-subjects factorial analyses revealed no significant interactions between age group and gender on any of the emotional dimensions.

Discussion

The results of this standardization study described the demographic characteristics of 200 healthy subjects and their patterns of performance on the BNI Affect Test. Overall, females performed better than males and younger subjects performed better than older subjects. For all subjects, scores on the Facial Perception subtest and on items reflecting anger were the lowest.

Patterns of Performance: BNI Affect Test Total Score

Performance on the BNI Affect Test varied with gender: Females performed better than males. These findings are consistent with other research. Several studies have documented that women are more emotionally expressive and reactive and that they are better decoders of emotional stimuli than men.[1,11,12,17,24,29,34] The higher performance of females compared to males has been associated with higher ratings of extroversion and sympathy[34] and with greater regional blood flow in the anterior limbic regions of the brain on positron emission tomography.[23]

The BNI Affect Test also discriminated age differences: Younger subjects performed better than older subjects. Specifically, the 19- to 39- and 40- to 59-year-old groups performed significantly better than the 60- to 78-year-old group. These findings are consistent with the results of other studies.[30,33,44] Older subjects tend to perceive and experience more intense emotional responses than younger subjects, but their perceptions are less accurate than those of younger subjects.[24,30]

Females in the 19- to 39- and 40- to 59-year-old groups performed significantly better than females in the 60- to 78-year-old group. This finding suggests that affect performance, as measured by the BNI Affect Test, may not begin to decline in females until after the age of 60 years. For males, the youngest group (i.e., 19 to 39 years old) performed significantly better than the oldest group of males (i.e., 60 to 78 years old).

On the emotional dimensions, the highest mean score was obtained on items reflecting happiness, followed by sadness, fear/surprise, and anger. This pattern of performance was true across age and gender, although females performed better than males and younger subjects performed better than older subjects on all of the emotional dimensions. The happiness stimulus was perceived accurately by 70% of the sample, the sadness stimuli by 54%, the fear/surprise stimulus by 48%, and the anger stimulus by 36%. The pattern of performance observed across emotional dimensions is consistent with other research that has shown that healthy subjects perceive positive emotions (e.g., happiness) more easily than negative emotions (e.g., fear, anger).[18]

At least six universal facial expressions have been identified across cultures (i.e., happiness, sadness, fear, surprise, anger, and disgust). Ekman and Friesen[19] identified happy, angry, and fearful (or surprised) as among the most basic universal facial emotions. They found that the accuracy rate of healthy subjects from New Guinea for perceiving happy faces was 95% to 100%; their accuracy rate for perceiving angry faces was 67% to 96% and that for perceiving fearful faces was 54% to 85%. Fearful faces were often misperceived as reflecting surprise, a finding that was also observed in the present study. In the present study, percentages across dimensions were lower than those reported by Ekman and Friesen.[19] However, stimulus pictures on the BNI Affect Test were not limited to facial expressions. They also included interpersonal interactions and discrimination tasks, which may account for some of the variability.

Patterns of Performance: Subtest Scores

Both males and females obtained the highest scores on the Facial Discrimination subtest, followed by the Facial Matching, Interpersonal Perception, and Facial Perception subtests. On the Facial Discrimination subtest, 87.5% of subjects answered all items correctly. In contrast, only 33.5% of the subjects answered all items correctly on the Facial Perception subtest. Significant age differences were observed among females on the Facial Perception subtest only. The 19- to 39-year-old group performed significantly better than the 60- to 78-year-old group. There were no significant age differences among males on any of the subtests, although differences on the Facial Perception subtest approached significance (p = .06). Thus, the Facial Perception subtest appears to be the most difficult subtest on the BNI Affect Test, and the Facial Discrimination subtest appears to be the easiest.

Unlike the Facial Perception subtest, which requires subjects to identify a facial expression, the Facial Discrimination subtest provides choices for identifying a specific emotional expression. It provides structure and cues and should therefore produce the highest scores. Given the pattern of performance observed among healthy normal control subjects, it is expected that brain dysfunctional patients will also score highest on the Facial Discrimination subtest. Whether their performance on this subtest will be impaired relative to the performance of normal control subjects, however, remains to be examined.

Females performed better than males on all of the subtests, with the exception of the Interpersonal Perception subtest on which there was no difference. The lack of gender difference on this subtest may reflect the context within which the affect is displayed. The Interpersonal Perception subtest requires decoding a social interaction rather than decoding individual facial affect. Interpretation of affect in social situations may be less ambiguous than the interpretation of individual facial affect because such interactions provide interpersonal cues and rely less on individual emotional experiences. Thus, while females may decode individual emotional experiences better than males, both females and males may be able to decode emotional stimuli equally well when presented within the context of an interpersonal interaction. This area of research will be particularly important in the study of brain dysfunctional patients whose interpersonal skills are often compromised after brain injury.

Normative data from this study permit interpretation and comparative studies of the clinical performance of brain dysfunctional patients. Studies are in progress to document the reliability and validity of the test and to demonstrate its sensitivity and clinical utility with brain-injured patients.

Acknowledgments

This research was supported in part by grants from the Barrow Neurological Foundation and the Bertelsmann Foundation in Munster, Germany awarded to Susan Borgaro, PhD.

The author acknowledges George Prigatano, PhD, and Julie Ward for their support and assistance in the development of the BNI Affect Test. Carol Wheelis helped recruit subjects for the development of the test, and Elizabeth Downey assisted with data collection.

References

1. Ashmore RD: Sex, gender, and the individual, in Pervin LA (ed): Handbook of Personality: Theory and Research. New York: Guilford Press, 1990, pp 486-526

2. Benton AL: The neuropsychology of facial recognition. Am Psychol 35:176-186, 1980

3. Bihrle AM, Brownell HH, Powelson JA, et al: Comprehension of humorous and nonhumorous materials by left and right brain-damaged patients. Brain Cogn 5:399-411, 1986

4. Borgaro SR, Caples H, Prigatano GP, et al: Errors in facial perception following acute spinal cord injury using the BNI Screen. SCI Psychosocial Process 16:147-153, 2003

5. Borgaro SR, Prigatano GP: Affective disturbances after acute, severe traumatic brain injury as measured by the BNI Screen for Higher Cerebral Functions. BNI Quarterly 17(4):14-17, 2002

6. Borgaro SR, Prigatano GP: Early cognitive and affective sequelae of traumatic brain injury: A study using the BNI Screen for Higher Cerebral Functions. J Head Trauma Rehabil 17:526-534, 2002

7. Borgaro SR, Prigatano GP, Kwasnica C, et al: Disturbances in affective communication following brain injury. Brain Inj 18:33-39, 2004

8. Borod JC, Bloom RL, Hayward CS: Verbal aspects of emotional communication, in Beeman M, Chiarello C (eds): Right Hemisphere Language Comprehension: Perspectives from Cognitive Neuroscience. Mahwah, NJ: Lawrence Erlbaum Associates, 1998, pp 285-307

9. Borod JC, Tabert MH, Santschi C, et al: Neuropsychological assessment of emotional processing in brain-damaged patients, in Borod JC (ed): The Neuropsychology of Emotion. New York: Oxford University Press, 2000, pp 80-105

10. Borod JC: Interhemispheric and intrahemispheric control of emotion: A focus on unilateral brain damage. J Consult Clin Psychol 60:339-348, 1992

11. Brody LR: Gender differences in emotional development: A review of theories and research. J Personality 53:102-149, 1985

12. Brody LR, Hall JA: Gender and emotion, in Lewis M, Haviland JM (eds): Handbook of Emotions. New York: Guilford Press, 1993, pp 447-460

13. Buck R, Duffy RJ: Nonverbal communication of affect in brain-damaged patients. Cortex 16:351-362, 1980

14. Cicone M, Wapner W, Gardner H: Sensitivity to emotional expressions and situations in organic patients. Cortex 16:145-158, 1980

15. DeKosky ST, Heilman KM, Bowers D, et al: Recognition and discrimination of emotional faces and pictures. Brain Lang 9:206-214, 1980

16. De Renzi E, Spinnler H: Facial recognition in brain-damaged patients: An experimental approach. Neurology 16:145-152, 1966

17. Dimberg U, Lundquist LO: Gender differences in facial reactions to facial expressions. Biol Psychol 30:151-159, 1990

18. Duhaney A, McKelvie SJ: Gender differences in accuracy of identification and rated intensity of facial expressions. Percept Mot Skills 76:716-718, 1993

19. Ekman P, Friesen WV: Unmasking the Face: A Guide to Recognizing Emotions from Facial Expressions. Englewood Cliffs, NJ: Prentice Hall, 1975

20. Fennell E, Mulheira M: Lateralized differences in recall of dichotic words and emotional tones (abstract). Neuropsychological Society, Pittsburgh, PA, 1982

21. Ferrand C, Bloom R: Introduction to Organic and Neurogenic Disorders of Communication: Current Scope of Practice. Boston: Allyn and Bacon, 1997

22. Gainotti G: Emotional behavior and hemispheric side of the lesion. Cortex 8:41-55, 1972

23. George MS, Ketter TA, Parekh PI, et al: Gender differences in regional cerebral blood flow during transient self-induced sadness or happiness. Biol Psychiatry 40:859-871, 1996

24. Grunwald IS, Borod JC, Obler LK, et al: The effects of age and gender on the perception of lexical emotion. Appl Neuropsychol 6:226-238, 1999

25. Hamsher K, Levin HS, Benton AL: Facial recognition in patients with focal brain lesions. Arch Neurol 36:837-839, 1979

26. Heilman KM, Bowers D, Speedie L, et al: Comprehension of affective and nonaffective prosody. Neurology 34:917-921, 1984

27. Heilman KM, Scholes R, Watson RT: Auditory affective agnosia. Disturbed comprehension of affective speech. J Neurol Neurosurg Psychiatry 38:69-72, 1975

28. Kolb B, Taylor L: Affective behavior in patients with localized cortical excisions: Role of lesion site and side. Science 214:89-91, 1981

29. LaFrance M, Banaji M: Toward a reconsideration of the gender-emotion relationship, in Clark MS (ed): Emotion and Social Behavior: Review of Personality and Social Psychology. Newbury Park, CA: Sage Publications, 1992, pp 178-201

30. Levenson RW, Carstensen LL, Friesen WV, et al: Emotion, physiology, and expression in old age. Psychol Aging 6:28-35, 1991

31. Ley R: Dissociation of emotional tone and semantic content through dichotic listening (abstract). Canadian Psychological Association, Calgary, Alberta, Canada, 1980

32. Ley RG, Bryden MP: Hemispheric differences in processing emotions and faces. Brain Lang 7:127-138, 1979

33. Malatesta CZ, Izard CE: The facial expression of emotion: Young, middle-aged, and older adult expressions, in Malatesta CZ, Izard CE (eds): Emotion in Adult Development. Beverly Hills, CA: Sage Publications, 1984, pp 253-273

34. Otta E, Folladore AF, Hoshino RL: Reading a smiling face: Messages conveyed by various forms of smiling. Percept Mot Skills 82:1111-1121, 1996

35. Prigatano G, Amin K, Rosenstein L: Administration and Scoring Manual of the BNI Screen for Higher Cerebral Functions. Phoenix, AZ: Barrow Neurological Institute, 1995

36. Prigatano GP, Pribram KH: Perception and memory of facial affect following brain injury. Percept Mot Skills 54:859-869, 1982

37. Prigatano GP, Wong JL: Cognitive and affective improvement in brain dysfunctional patients who achieve inpatient rehabilitation goals. Arch Phys Med Rehabil 80:77-84, 1999

38. Raskin SA, Bloom RL, Borod JC: Rehabilitation of emotional deficits in neurological populations: A multidisciplinary perspective, in Borod JC (ed): The Neuropsychology of Emotion. New York: Oxford University Press, 2000, pp 413-431

39. Ross ED: The aprosodias. Functional-anatomic organization of the affective components of language in the right hemisphere. Arch Neurol 38:561-569, 1981

40. Safer MA, Leventhal H: Ear differences in evaluating emotional tones of voice and verbal content. J Exp Psychol Hum Percept Perform 3:75-82, 1977

41. St Clair J, Borod JC, Sliwinski M, et al: Cognitive and affective functioning in Parkinson’s disease patients with lateralized motor signs. J Clin Exp Neuropsychol 20:320-327, 1998

42. Strauss E, Moscovitch M: Perception of facial expressions. Brain Lang 13:308-332, 1981

43. Suberi M, McKeever WF: Differential right hemispheric memory storage of emotional and nonemotional faces. Neuropsychologia 15:757-768, 1977

44. Sullivan S, Ruffman T: Emotion recognition deficits in the elderly. Int J Neurosci 114:403-432, 2004

45. Tompkins CA: Right Hemisphere Communication Disorders: Theory and Management. San Diego: Singular Publishing Group, Inc., 1995

46. Tucker DM, Watson RT, Heilman KM: Discrimination and evocation of affectively intoned speech in patients with right parietal disease. Neurology 27:947-950, 1977

47. Yin RK: Face recognition by brain-injured patients: A dissociable ability? Neuropsychologia 8:395-402, 1970

48. Zigler L, Prigatano G: Perception of facial affect after acute brain injury. BNI Quarterly 16(3):36, 2000